When the Medical System Thinks Instead of the Physician: Where Does the Human Stand? (Eng. Programmer Aheeb Hashim Karim)

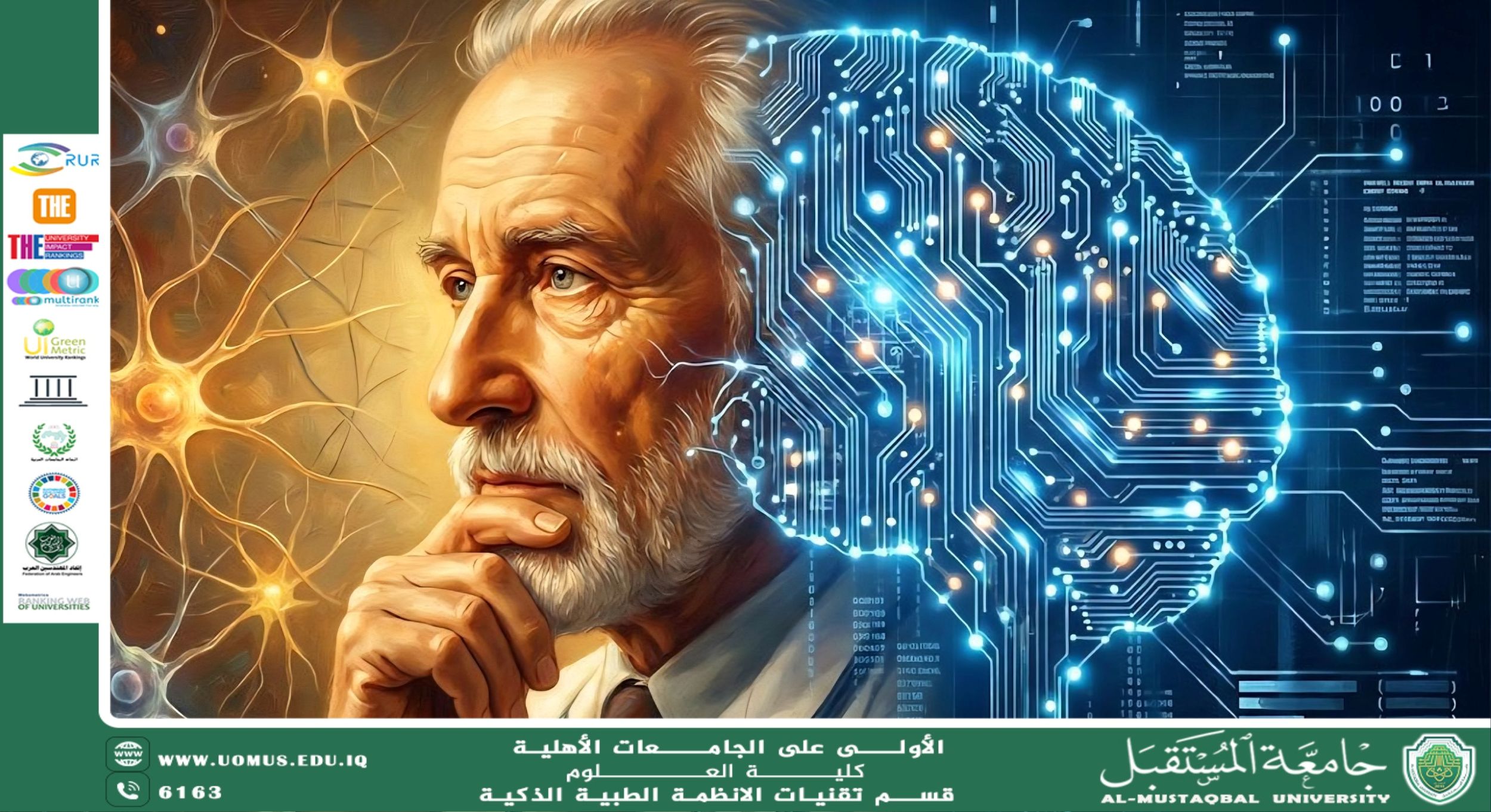

The healthcare sector has developed remarkably in recent decades as artificial intelligence and smart medical systems have been integrated across the stages of healthcare delivery, from diagnosis to therapeutic decision support. These systems can now analyze vast amounts of clinical data quickly and accurately, which helps improve the quality of healthcare services and reduce errors caused by the pressure or time constraints that clinicians work under. This rapid technological transformation has raised fundamental questions about what role remains for physicians in an era when machines are capable of advanced thinking and analysis.

Despite the technical efficiency achieved by smart medical systems, medicine cannot be reduced solely to data processing. Medical practice rests on a comprehensive understanding of the patient’s condition, encompassing psychological, social, and cultural dimensions, and on building a human relationship founded on trust and communication. Intelligent systems cannot comprehend or engage with these aspects at the same depth as humans, because such systems lack consciousness and ethical awareness.

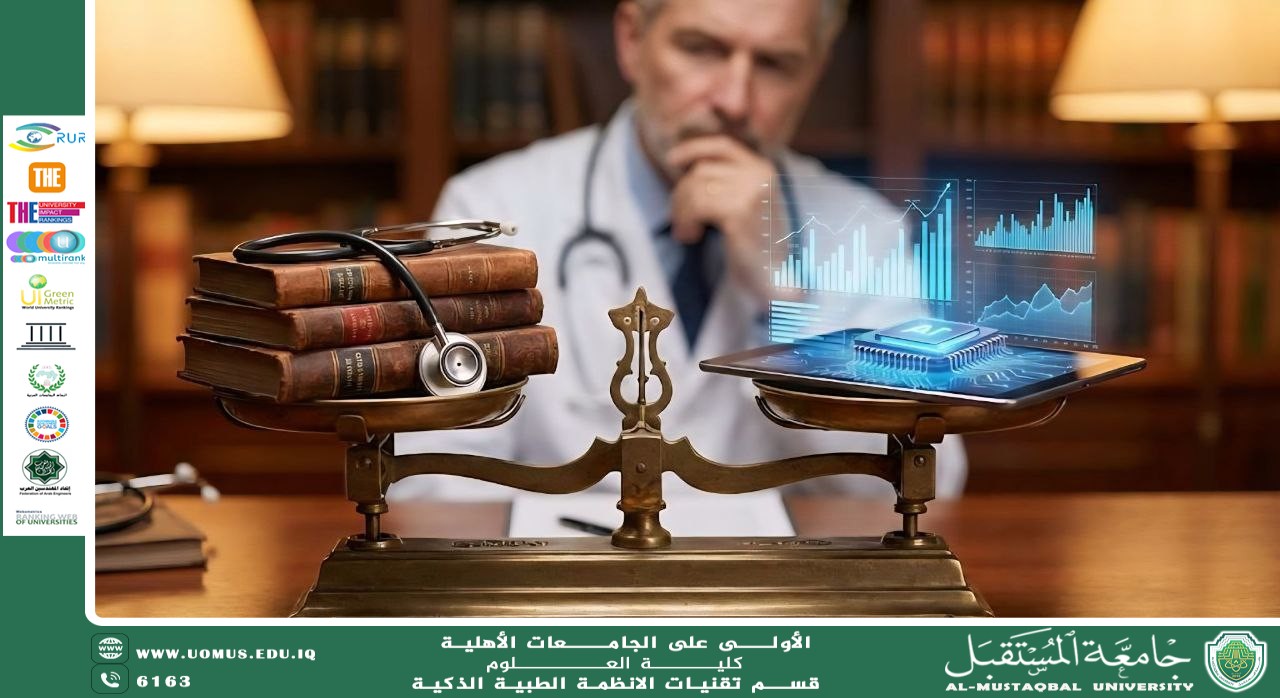

Complete reliance on artificial intelligence may marginalize the physician’s role, reducing the physician to a mere executor of algorithmic decisions. This scenario raises ethical and legal challenges related to medical responsibility and accountability. Therefore, the safe and effective use of such systems requires continuous human supervision to ensure that their outputs are interpreted within the proper clinical context.

Within this framework, the optimal model for future healthcare lies in the integration of artificial intelligence capabilities with the physician’s human expertise. While machines enhance analytical precision, humans preserve the core values of medicine, ensuring balanced healthcare delivery that combines technological advancement with ethical responsibility.